I was running out of space on my old NAS, so, instead of doing the sensible thing of just buying larger drives, I decided to buy larger drives and build a new storage server.

While that is a little ridiculous, I think this actually works out better in the long run. Before I make that argument, let's go through this pile of parts.

The build

a pile of parts I probably spent way too much on

What we have here is:

- 12TB WD Red Plus HDDs (x4)

- 2TB Samsung 870 EVO SSDs (x4)

- 1TB Crucial P3 Plus NVMe SSD

- 128 GB of registered ECC DDR4-2400 memory (4x 32 GB)

- ConnectX-3 10G EN

- Supermicro A2SDi-8C+-HLN4F

- Corsair RM750x PSU

- 80mm Noctua NF-A8 (x5)

- RackChoice 2U 8-bay chassis

The motherboard/CPU choice is a little odd here, but I'm hoping this will end up using less power than the old system. The CPU embedded on this board is an 8-core Atom C3758 with a 25 W TDP. Powered off, with only the IPMI active, I measured about 5.7 watts at the wall. With all drives active, 5 fans, a 10G card, and all RAM slots populated, I'm measuring 65 watts at the wall. I assume the idle power draw will be lower with only a subset of the drives active. I'll note that, during the initial spin-up and boot, power draw does peak at a little over 100 watts, but this is brief.
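To put those wall readings in perspective, here is a rough sketch that converts a constant draw into energy per year. It assumes the load stays flat around the clock, which won't be true once drives spin down, so treat the results as upper and lower bounds rather than a prediction. The function name is just for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # non-leap year


def annual_kwh(watts: float) -> float:
    """Energy used in one year by a constant load, in kWh."""
    return watts * HOURS_PER_YEAR / 1000


# Wall measurements quoted above.
measurements = {
    "powered off, IPMI only": 5.7,
    "all drives active": 65.0,
}

for label, watts in measurements.items():
    print(f"{label}: {watts} W -> {annual_kwh(watts):.1f} kWh/year")
```

Multiplying the result by a local electricity rate gives a yearly cost; no rate is assumed here.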

Because I'm pretty much only going to be using this as storage (my kubernetes cluster and proxmox cluster are external), the C3758 is plenty of power. The RAM is probably overkill, but the difference in cost between the 64 GB and 128 GB was small enough that it was hard to justify getting less. The motherboard actually supports 256 GB of registered ECC, which seems even more ridiculous to pair with this CPU. Regardless, the amount of RAM and number of sticks is contributing to the slightly higher-than-anticipated power draw.

Perhaps the most important feature of the A2SDi-8C+-HLN4F (and the higher core-count variants) is the support for 12 SATA drives (4 ports + 2 SFF-8643 headers), 1 NVMe drive, and a PCIe 3.0 x4 slot. There is a variant of this board with 10G NICs, as well as a more expensive 16-core C3958 variant that has an on-board SFP+ slot, but this seemed to be the best bang-for-the-buck, considering I won't need an HBA to connect all the drives and I already had a ConnectX-3 lying around for the 10G connectivity this board is missing (it has 4 1G NICs).

The PSU is overkill and I could probably do better with a more efficient, smaller PSU or a pico-PSU, but the RM750x is modular to help with the cable clutter, is actually reasonably efficient at low power draw, and, since I have a bunch of similar PSUs, I had a ton of the 4-pin molex modular cables lying around (the backplane "requires" 3 molex connections).

As for the drives, my plan is to have two effective pools of storage, with the 870 EVOs offering "fast" storage and the 12TB drives for longer-term or more write-heavy storage. The 1TB NVMe drive will serve as a write-cache for the HDD pool. The selection of the 12TB WD Red Plus drives was made mostly with noise as a consideration, as these are nearly silent against the ambient noise in my office. Compare that to the 12TB IronWolf drives I have, which are relatively noisy.

If I have to be honest, the chassis is pretty bad. While the chassis itself isn't flimsy, the drive trays are both flimsy and ill-fitting, and because they're non-rigid metal, I had to insulate the exposed PCBs on the HDDs from making contact with the bottom surface of the trays by using a folded piece of paper. Most annoyingly, the alignment of the drive connectors with the default mounting location of the backplane is a little suspect, and I had trouble getting all of the drives to seat without using a bit of jiggling and force.

flimsy trays

It did come with fans, but I swapped those out for 80mm Noctua NF-A8s, which are quieter, though they move slightly less air.

stock fans

The backplane has fan headers, but offers no speed control, so I'll be relying on the motherboard's 3 usable fan headers (CPU fan uses the 4th) to drive all five NF-A8s (with splitters).

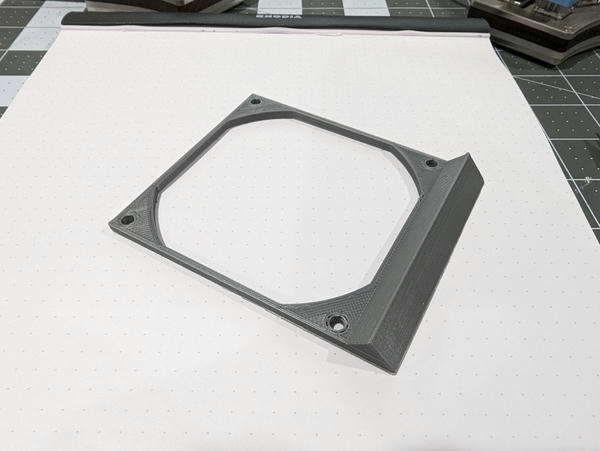

A keen-eyed observer would note that the chassis only has mounting points for four 80mm fans, so we're going to have to do something about the fifth fan, which I plan to use to force air over the NVMe drive and the ConnectX-3. It's probably unnecessary, but I had a fifth fan, and it was an excuse to make this:

3d-printed, angled fan bracket

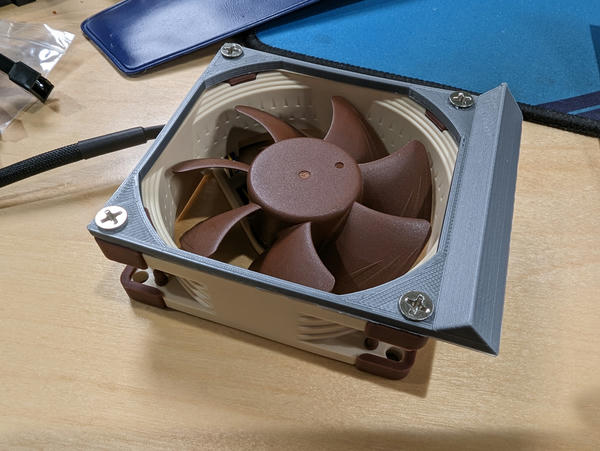

Which mounts to an NF-A8 like so (the screws that come with the fans are already thread-cutting, so I sized the mounting holes such that the screws would also cut threads in those).

mounted to the NF-A8

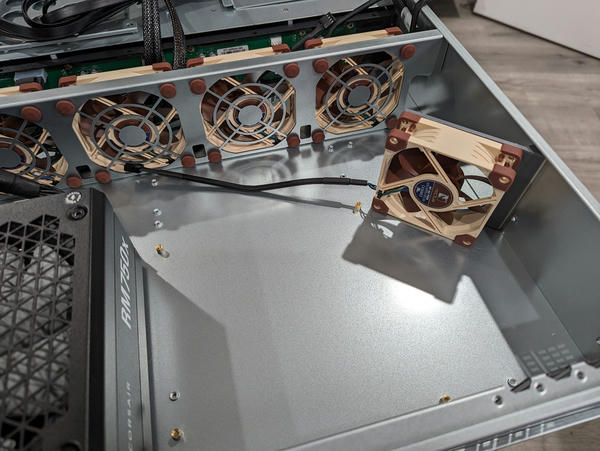

And the assembly mounts to the inner wall of the chassis via a double-sided adhesive.

install location in chassis

The surface finish isn't great, and it's nothing particularly unique, but I'll take any excuse to make something functional with my 3D printer.

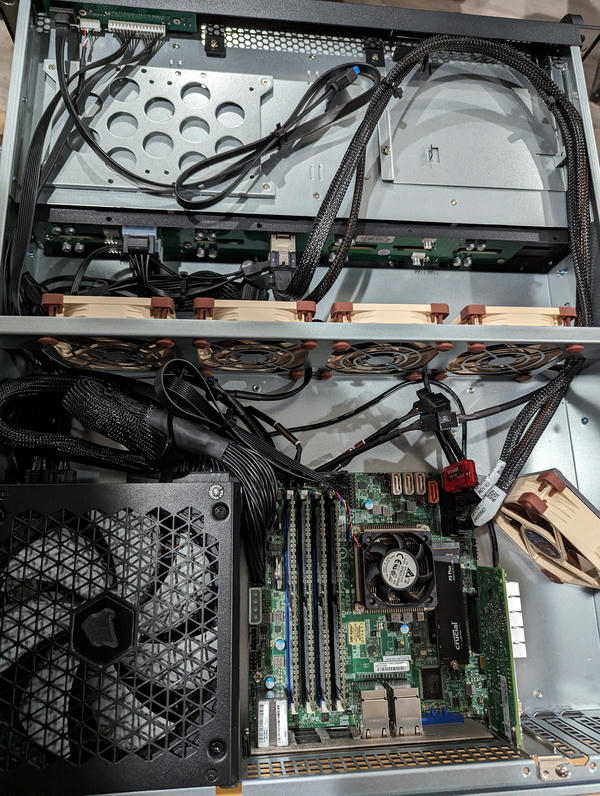

With that out of the way, here are some photos of the installed components and my passable cable-management.

assembled

mostly clean

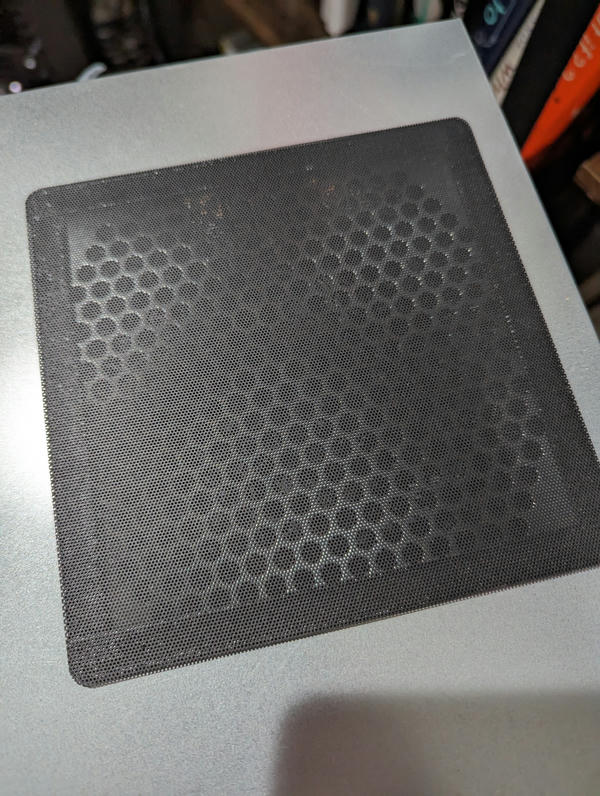

Because of the 2U form-factor, the fan of a modern ATX power supply has to pull in air through the lid of the chassis. I did search for an industrial power supply with front-to-back airflow, but could not find one that seemed reliable and was cheaper than the RM750x. The chassis has ventilation holes cut for the PSU fan, and, since it's facing up, I added a magnetic dust filter to offer some dust protection.

an added magnetic dust filter

This does mean whatever is mounted above this in the rack needs to be shorter depth, so the plan is to put this close to the top (under the switches).

An unexpected hiccup was that the unique IPMI password printed on the motherboard did not work, and I had to make an ubuntu live USB to run Supermicro's IPMICFG tool to reset the password. I'm assuming the seller did not, in fact, have a new motherboard for sale, but perhaps a previously used one. The only other explanation is a mixup with the password provided by Supermicro.

Once that was sorted, I could use ipmitool to set the fan sensor thresholds, since the motherboard expects much higher RPM fans and would spin all fans up to 100% because it assumed the NF-A8s had failed. This was simple enough:

ipmitool -I lan -U ADMIN -H [host] sensor thresh FAN1 lower 150 250 300

ipmitool -I lan -U ADMIN -H [host] sensor thresh FAN2 lower 150 250 300

ipmitool -I lan -U ADMIN -H [host] sensor thresh FANA lower 150 250 300

Yes, the fans are FAN1, FAN2, FAN3 (CPU), and FANA.
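If the thresholds ever need to be reapplied (after a BMC reset, say), the three commands above could be generated from a small script. This is only a sketch: the host name is a placeholder, the helper names are made up for illustration, and it prints the commands instead of running them. The three numbers follow ipmitool's order for `lower` thresholds: non-recoverable, critical, non-critical.

```python
import shlex

# Fan headers driving the NF-A8s; FAN3 (CPU) is left untouched, as above.
CASE_FANS = ["FAN1", "FAN2", "FANA"]

# Lower thresholds in RPM: non-recoverable, critical, non-critical.
LOWER_THRESHOLDS = (150, 250, 300)


def thresh_argv(host: str, sensor: str, user: str = "ADMIN") -> list[str]:
    """Build the argv for one 'ipmitool sensor thresh' call."""
    return [
        "ipmitool", "-I", "lan", "-U", user, "-H", host,
        "sensor", "thresh", sensor, "lower",
        *(str(rpm) for rpm in LOWER_THRESHOLDS),
    ]


def all_commands(host: str) -> list[list[str]]:
    """One command per case fan header."""
    return [thresh_argv(host, fan) for fan in CASE_FANS]


if __name__ == "__main__":
    # "bmc.example" is a placeholder, not a real host.
    for argv in all_commands("bmc.example"):
        print(shlex.join(argv))
        # To apply for real: subprocess.run(argv, check=True)
```

As with the original commands, no password is passed on the command line, so ipmitool will prompt for one on each call.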

After this, it was just a matter of running a (very long) memtest and firing up Unraid. You'll note that this is taking place out of the rack, since it's easier for me to make changes and monitor the power consumption this way.

parity sync and stability testing

As far as airflow goes, with the motherboard controlling the fan speeds, the hottest I've seen the 3.5-inch drives get under sustained load is 41 C, which is acceptable if a little on the warm side. From a noise perspective, it's quieter than I expected, as in it's barely audible. It's definitely quieter than the 1U firewall sitting above it.

The OS/software

For this build, I've decided to go with Unraid, not because I plan on running VMs or containers on the server (though having that option is nice), but because I'm betting that an HDD array that isn't a traditional RAID array will cut down on overall power usage: since the data isn't striped, reads and writes require spinning up fewer disks. This has the obvious downside of limiting my read speeds to the speed of any one drive (writes should leverage the NVMe cache), but that's probably worth it if I'm mostly using this for media storage, where the speed of a single drive is more than enough. The additional hope is that, in the event of losing two drives, I can still recover the data on the remaining drive(s), again because the data isn't striped.

If I need faster storage, my plan is to run the four 2TB EVOs in a RAIDZ1 pool, keeping in mind that the SSD durability could become an issue, but we'll have to see how that goes. This feels like the best compromise between durable, dense storage for large media files while also providing faster, perhaps less-durable, storage for kubernetes PVCs or VM storage or other specific file shares. The SSDs also have the benefit of consuming way less power than the spinning drives. Creative use of rsync and whatnot might give me a tiered storage scheme (beyond what mover can give me), but I don't know how much I want to invest in that.
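For a rough sense of what the two pools provide, here is a back-of-the-envelope capacity sketch. The number of parity drives in the Unraid array isn't stated above, so both one and two are shown as assumptions. Figures are raw drive capacity and ignore filesystem overhead.

```python
def unraid_array_usable_tb(drive_tb: int, drives: int, parity_drives: int) -> int:
    """Usable space when every drive is the same size and parity drives hold no data."""
    return drive_tb * (drives - parity_drives)


def raidz1_usable_tb(drive_tb: int, drives: int) -> int:
    """RAIDZ1 gives up roughly one drive's worth of space to parity."""
    return drive_tb * (drives - 1)


# HDD array: 4x 12TB. Parity count is an assumption, not stated in the post.
print(unraid_array_usable_tb(12, 4, parity_drives=1))  # 36
print(unraid_array_usable_tb(12, 4, parity_drives=2))  # 24

# SSD pool: 4x 2TB in RAIDZ1.
print(raidz1_usable_tb(2, 4))  # 6
```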

The plan is to use this new machine as the primary media storage and backup device, and to transition the existing NAS into a backup of this server, which in turn stores the important things in a third, off-site backup in Glacier or some Backblaze solution. This should mean that the existing NAS mostly has its drives spun down, and, if I'm watching something via Jellyfin, I only have one HDD spun up instead of all six in the existing NAS.

I realize that there are other solutions out there, some requiring more work than others, but Unraid seems like the right call, despite me not planning on taking advantage of the other major features like VMs and apps and whatnot. If I didn't already have a separate kubernetes cluster and whatnot, it might be tempting, but I'm not the biggest fan of hyper-converged setups.

Time will tell, I suppose.

Here's the racked result:

rack mounted

The future

Originally, I had my eyes set on a different motherboard that had support for two NVMe drives, which would have allowed me to have a mirrored cache. Unfortunately, the Supermicro board only has a single slot. I may decide to replace the current 1TB NVMe with a 4TB one if prices drop enough, since its capacity limits how much I can write to the array in a day. Since it'll mostly be buffering media that I can semi-easily recover, I'm less concerned about durability for that particular write cache.
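To illustrate why cache capacity matters, here is a small sketch of how quickly the cache would fill during a sustained transfer over the 10G link. It assumes the theoretical line rate with no protocol overhead, decimal terabytes, and a drive that can keep up with the network, so real transfers will take longer.

```python
TEN_GBE_BYTES_PER_S = 10e9 / 8  # 10 Gbit/s line rate, ignoring protocol overhead
TB = 1e12  # decimal terabyte, as drive vendors count


def seconds_to_fill(cache_bytes: float, write_bytes_per_s: float) -> float:
    """Time for a sustained write stream to fill an empty cache."""
    return cache_bytes / write_bytes_per_s


for size_tb in (1, 4):
    secs = seconds_to_fill(size_tb * TB, TEN_GBE_BYTES_PER_S)
    print(f"{size_tb}TB cache: {secs / 60:.1f} minutes at line rate")
```

At line rate, the 1TB cache fills in 800 seconds and a 4TB one in 3200 seconds.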

If, at some point, Unraid adds read caching, I'll have to revisit my plans here. Thankfully, the motherboard does have 4 remaining SATA ports and the case supports another four 2.5-inch drives via internal mounting points, so there's always the possibility of using a slower SATA SSD as a read cache if it's ever supported.

Lastly, I need to figure out my monitoring plan. I run node_exporter on my other machines (for prometheus), so I'd like to do something similar on this. One thing I'll have to ensure is that, regardless of monitoring tool, I want to prevent the monitoring from triggering drive spin-ups.
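One possible approach, sketched here under several assumptions, is to poll drives with `smartctl -n standby`, which skips the check rather than waking a disk that is in standby, and to emit a Prometheus-style metric line that could be written to a file for node_exporter's textfile collector. The metric name `nas_disk_spun_up` and the helper names are made up for illustration, and the standby detection assumes smartctl mentions STANDBY in its output when it skips a drive, which should be verified on the actual system.

```python
import subprocess


def smartctl_argv(device: str) -> list[str]:
    """Read SMART attributes, but skip the drive if it is in standby."""
    return ["smartctl", "-n", "standby", "-A", device]


def looks_spun_down(output: str) -> bool:
    # Assumption: smartctl reports STANDBY in its output when it skips the check.
    return "STANDBY" in output.upper()


def spun_up_metric(device: str, spun_up: bool) -> str:
    """Format one line in the Prometheus text exposition format."""
    return f'nas_disk_spun_up{{device="{device}"}} {int(spun_up)}'


def probe(device: str) -> str:
    """Run smartctl (needs root) and return a metric line for the device."""
    result = subprocess.run(smartctl_argv(device), capture_output=True, text=True)
    return spun_up_metric(device, not looks_spun_down(result.stdout))
```

Calling `probe("/dev/sda")` from a cron job and writing the result to a `.prom` file would be one way to wire this up, though whether a given collector touches the disks in other ways still needs testing.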