Several months ago, a server I'd been using for nearly a decade decided to call it quits. I planned to replace it with a new machine built on modern hardware, but those plans quickly spiraled into redoing most of my cluster.

My old setup was mostly containerized, with most services managed by docker compose and spread across two machines. Each service was reachable at its host's address on its assigned port.

For the replacement machine, I went with a desktop form factor because I already had the case, plus an old, full-sized GPU lying around that needed a new home. I chose a 12600K over a 12700 or higher because of sales and cost, figuring I could swap the CPU out later if necessary. My one splurge was 128 GB of RAM (normal desktop RAM, not ECC). For storage, a 1 TB Samsung 980 Pro NVMe drive serves as the main boot/OS drive, supplemented by four more terabytes of SSD storage and two terabytes of spinning disks (drives I had lying around). Most of that storage is arguably unnecessary, since I also have a NAS as my primary source of shared, durable storage. Where the SSDs do help is as fast backing storage for persistent volume claims.

the interior of the primary node

Kubernetes

Instead of recreating the docker compose setup, I opted for a k3s deployment (I'm currently weighing whether to move the control plane to a highly available (HA) setup). Going this route lets me treat the hardware I have as a more generic pool of compute, and it gives me a setup much closer to the ones I'm familiar with at work.

Load balancer functionality comes from MetalLB, with two Traefik instances as my ingress controllers (one internal, one external). Local DNS resolution is currently handled by hard-coded DNS entries in my Pi-hole that point at the internal Traefik address. For external resolution, I'm running a pod that periodically updates my DNS records in Cloudflare. For certificates, I'm going the usual cert-manager route with certificates from Let's Encrypt (separate certificates for the internal and external ingress). I also have the necessary extensions installed to take advantage of the GPU for workloads that require it.
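The post doesn't say what the Cloudflare updater pod actually runs. As a rough illustration of the idea, here is a minimal Python sketch of a dynamic-DNS loop against Cloudflare's v4 API. It assumes a scoped API token, a zone ID, and a record name supplied through hypothetical environment variables (`CF_API_TOKEN`, `CF_ZONE_ID`, `CF_RECORD_NAME`), and it uses api.ipify.org as the public-IP source; none of these details come from the original setup.

```python
import json
import os
import time
import urllib.parse
import urllib.request
from typing import Optional

CF_API = "https://api.cloudflare.com/client/v4"


def needs_update(current_ip: str, record: Optional[dict]) -> bool:
    """True when the A record is missing or points at a stale address."""
    return record is None or record.get("content") != current_ip


def cf_request(method: str, path: str, token: str, body: Optional[dict] = None) -> dict:
    """Call the Cloudflare v4 API with a bearer token and return the decoded JSON."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(
        CF_API + path,
        data=data,
        method=method,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def public_ip() -> str:
    # Assumption: ipify returns the caller's public IPv4 address as plain text.
    with urllib.request.urlopen("https://api.ipify.org", timeout=10) as resp:
        return resp.read().decode().strip()


def sync_once(token: str, zone_id: str, name: str) -> bool:
    """Create or update the A record for `name`; return True if a change was made."""
    ip = public_ip()
    query = urllib.parse.urlencode({"type": "A", "name": name})
    found = cf_request("GET", f"/zones/{zone_id}/dns_records?{query}", token)
    record = found["result"][0] if found["result"] else None
    if not needs_update(ip, record):
        return False
    if record is None:
        # ttl=1 means "automatic" in Cloudflare's API.
        cf_request("POST", f"/zones/{zone_id}/dns_records", token,
                   {"type": "A", "name": name, "content": ip, "ttl": 1})
    else:
        cf_request("PATCH", f"/zones/{zone_id}/dns_records/{record['id']}", token,
                   {"content": ip})
    return True


def main() -> None:
    # Hypothetical environment variables, e.g. injected from a Kubernetes Secret.
    token = os.environ["CF_API_TOKEN"]
    zone_id = os.environ["CF_ZONE_ID"]
    name = os.environ["CF_RECORD_NAME"]
    interval = int(os.environ.get("SYNC_INTERVAL_SECONDS", "300"))
    while True:
        try:
            sync_once(token, zone_id, name)
        except OSError as exc:  # urllib's URLError/HTTPError subclass OSError
            print(f"sync failed: {exc}")
        time.sleep(interval)


# Only start the loop when credentials are actually configured.
if __name__ == "__main__" and "CF_API_TOKEN" in os.environ:
    main()
```

Keeping the comparison in `needs_update` separate means the loop only writes to Cloudflare when the address has actually changed. In a cluster, a script like this would typically run as a small Deployment with the token mounted from a Secret.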

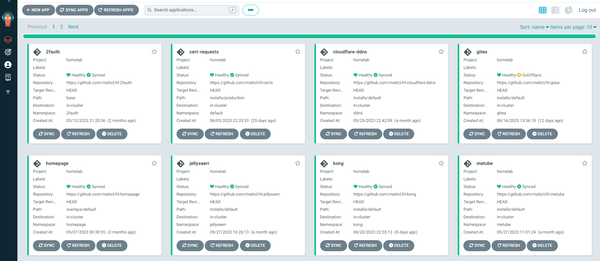

All services are deployed and configured via ArgoCD, with the goal of being able to recreate the cluster from code if an outage costs me the control plane (or when I eventually migrate to a new setup).

the argocd dashboard

Being able to define new services by declaring Kubernetes resources and handing them off to ArgoCD has removed a lot of operational overhead and effectively eliminated one-off "unicorn" configurations (albeit at the expense of a more complicated environment). Since many projects are built with Kubernetes in mind, it's been nice to use off-the-shelf configurations instead of writing and maintaining my own compose files. It's also nice to use the same tooling (k9s, Prometheus, etc.) that I use in my day job.
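To make the "declare it and hand it to ArgoCD" workflow concrete, the sketch below builds an Argo CD `Application` manifest in Python and prints it as JSON, which `kubectl apply -f -` accepts. The repository URL, path, and service name are hypothetical placeholders, and automated sync with `prune`/`selfHeal` is one reasonable choice, not necessarily what this cluster uses.

```python
import json


def argocd_application(name: str, repo_url: str, path: str, namespace: str,
                       revision: str = "HEAD") -> dict:
    """Build an Argo CD Application that keeps `path` in `repo_url` synced to the cluster."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Application resources conventionally live in the namespace Argo CD runs in.
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {"repoURL": repo_url, "targetRevision": revision, "path": path},
            # Deploy into the same cluster Argo CD is running in.
            "destination": {"server": "https://kubernetes.default.svc", "namespace": namespace},
            "syncPolicy": {
                # Delete resources removed from git and revert manual drift.
                "automated": {"prune": True, "selfHeal": True},
                "syncOptions": ["CreateNamespace=true"],
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical repository and service, for illustration only.
    app = argocd_application(
        name="whoami",
        repo_url="https://git.example.com/homelab/cluster.git",
        path="apps/whoami",
        namespace="whoami",
    )
    print(json.dumps(app, indent=2))
```

Because the manifest itself lives in git, rebuilding the cluster mostly comes down to reinstalling Argo CD and re-applying these Application definitions.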

There have been a few pain points. I still need to work out the proper order for powering up the various machines after a power failure. Since the NAS backs some of the volumes used by media-related services, I need to wait for it to become available before recovering the Kubernetes cluster. Also, because local DNS resolution currently relies on the Pi-hole (running on a different host), services that don't rely on internal Kubernetes routing fail whenever that resolution isn't available.
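One way to approach the startup-ordering problem is a small gate that holds off k3s until its dependencies answer. The Python sketch below polls a list of TCP endpoints until all of them accept connections or a deadline passes. The hostnames are hypothetical, and it assumes the NAS serves NFS on port 2049 and the Pi-hole answers DNS over TCP on port 53.

```python
import socket
import time
from typing import Iterable, Tuple


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, or name resolution failed
        return False


def wait_for(deps: Iterable[Tuple[str, int]], deadline_s: float, poll_s: float = 5.0) -> bool:
    """Poll until every (host, port) is reachable; False if the deadline passes first."""
    end = time.monotonic() + deadline_s
    pending = list(deps)
    while True:
        # Drop endpoints that are now reachable; keep retrying the rest.
        pending = [(h, p) for h, p in pending if not port_open(h, p)]
        if not pending:
            return True
        if time.monotonic() >= end:
            return False
        time.sleep(poll_s)


# Hypothetical dependencies: NFS on the NAS and DNS on the Pi-hole.
DEPENDENCIES = [("nas.lan", 2049), ("pihole.lan", 53)]


def main() -> int:
    """Exit code for a pre-start hook: 0 once everything is reachable, 1 on timeout."""
    return 0 if wait_for(DEPENDENCIES, deadline_s=600) else 1
```

Running `main()` and exiting with its return code from an `ExecStartPre=` line in a systemd drop-in for the `k3s` unit is one possible hook: a nonzero exit stops that start attempt until the dependencies are reachable.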

New additions

I've also started building out a fleet of mini PCs that I use as build nodes for my Concourse server and as Tailscale nodes (for certain external connectivity and for peering with my Linode instances). At some point, I plan to relocate the Kubernetes control plane to three of the lower-power EQ12 units I own.

a Beelink SER6 Pro 7735HS

I will likely repurpose the oldest machine (well over a decade old at this point) as the monitoring/metrics host, though I'll probably install some faster storage in it.

Overall, I've been happy with the way things turned out.

Future

My next major project will likely be redoing the network setup. I've been eyeing some Ubiquiti hardware, and when I finally get around to hard-wiring the house, I'll need a more capable switch than my current one (ideally one with PoE for the access points). My current gateway also only supports 200 or so clients, and with all of the various devices in the house, I may well exceed that number.

Beyond that, I've been trying to figure out how to transition to a rack-based setup for a variety of reasons (one being that hardware bolted into a rack is harder to steal), but I'd need to work out where in the house to put the rack(s), and cooling could also be an issue.